MEFANET Journal 2014; 2(2): 41-50

ORIGINAL ARTICLE

Measuring the impact of information literacy e-learning and in-class courses via pre-tests and post-tests at the Faculty of Medicine, Masaryk University

Jiří Kratochvíl*

Masaryk University Campus Library, Brno, Czech Republic

* Corresponding author: kratec@ukb.muni.cz

Abstract

Article history:

Received 10 February 2014

Revised 10 July 2014

Accepted 2 October 2014

Available online 8 October 2014

Peer review:

Jarmila Potomková, Chris Paton


Introduction: This paper evaluates and compares the impact of information literacy (IL) e-learning and in-class courses at the Faculty of Medicine, Masaryk University, Czech Republic. The objective is to show that e-learning can be as effective a method of teaching IL as in-class lessons.

Methods: In autumn 2012 and spring 2013, a total of 159 medical students enrolled in the e-learning course completed the required pre-test and post-test, each comprising 30 multiple-choice questions on information literacy topics; another 92 PhD students from the in-class course took a 22-question version of the test. The pre-test and post-test scores, along with the number of students who correctly answered each question, were counted and converted into percentages. The final outcomes were the extent of the increase in knowledge and the percentage of students answering each question correctly.

Results: On average, 95.5% of the medical students and 92.5% of the PhD students increased their knowledge, while an average of 4.5% of the medical students and 7.5% of the PhD students scored lower in the post-test than in the pre-test. Of the 22 questions shared by both study groups, 15 were answered correctly more often by the medical students, 6 more often by the PhD students, and only 1 by the same average percentage of both groups.

Discussion: The results point to several key revisions. These include adding to both curricula an exercise on searching Web of Science or Scopus for an article whose full text is not available via the link service, and adding instructions on manually creating bibliographic references to the PhD course. Additional search examples will be added to the study materials, and video recordings of in-class lessons will be made available to students for later revision. Some test questions require revision so that they are based on practical examples rather than mere definitions. These results and the ensuing discussion can help convince advocates of in-class teaching of the benefits of e-learning in information literacy education. Such evidence can also assist other librarians in their own assessments and help persuade academic staff that librarians are professionally competent to teach information literacy to university students.

Keywords

Czech Republic; educational measurement; e-learning; information literacy; libraries; medical schools; teaching

Introduction

Although information literacy (IL) has been an essential part of the university curriculum for almost 40 years [1] and various studies have described the library as a significant partner of academic staff in IL activities [2–5], IL in the Czech Republic did not receive serious attention until the 1990s. The first specialized Information Education and Information Literacy Working Group (IVIG) was not established until the early 21st century [6]; its main goal was to highlight the need for IL courses at Czech universities and to promote their implementation. IVIG has now turned its attention to assessing the outcomes of IL programmes [6]. Since 2011, it has focused on assessing these outcomes with the pre-test/post-test method, which has been applied successfully in previous studies [7–12] and is an accepted method according to the Standards for Educational and Psychological Testing [13]. In 2011–2012, IVIG organised three seminars at which librarians from various Czech universities created pre-test and post-test questions and discussed technical testing options for standardising the assessment procedure at Czech university libraries.

The Masaryk University Campus Library (MUCL) was one of the initiators of, and an active participant in, the IVIG seminars. Since autumn 2011, MUCL has measured differences in the knowledge levels of students of the Faculty of Medicine (Masaryk University) to verify the effectiveness of its e-learning course VSIV021 Information literacy. During the same period, a similar measurement was conducted among PhD students of the same faculty who completed MUCL's in-class course DSVIz01 (Acquisition of Scientific Information). DSVIz01 is an optional course taught in the classical face-to-face (F2F) form (three 2.5-hour lessons, 5 credits) because not all PhD students are PC literate. The lessons always combined lectures, instructions and practical tasks. VSIV021 was taught as an in-class course in spring 2008; since autumn 2008, owing to its optional status, it has been taught as an e-learning course (10 weeks, 4 credits).

Both courses focus on essential IL knowledge and skills as defined in international and Czech information literacy strategies [15–16]. However, DSVIz01 omits some topics and activities (Table 1) because PhD students have already acquired this knowledge and these skills from their previous studies and from their professional and personal lives. The e-learning course VSIV021 is embedded in the Masaryk University Learning Management System (MU LMS) and includes an interactive syllabus with study materials (online tutorials, PDF documents, a textbook and videos). For the purposes of the assessment, the study materials were almost identical for both groups of students. The MUCL website (http://www.ukb.muni.cz/kuk/vyuka/materialy) includes most of the online tutorials that are available to medical students in the interactive syllabus. Both groups were also recommended to study from the textbooks designated for the courses [16]. Two videos were available only to medical students because they contained recordings of the MUCL librarian's lessons (publication and citation ethics, scientific writing) that the PhD students attended in person. Even though the medical and PhD students could theoretically have studied the online tutorials before the course, there was no significant increase in clicks on these links, which satisfied MUCL that the students had not used the tutorials before the courses began.

Table 1. The DSVIz01 and VSIV021 contents according to general IL standards

| Module Objectives | VSIV021 | DSVIz01 |
|---|---|---|
| Knowledge of general library terminology and services | x | – |
| Search in online catalogue | x | – |
| Recognize the quality of a website | x | – |
| Identify keywords, synonyms and related terms | x | x |
| Construct a search strategy | x | x |
| Select appropriate database for finding information | x | x |
| Search in online databases | x | x |
| Access fulltext articles via linking service | x | x |
| Read a text and write an abstract or an annotation | x | x |
| Structure of thesis/paper | x | x |
| Text formatting | x | – |
| Publication and citation ethics | x | x |
| Cite documents according to various citation styles | x | x |
| Use reference managers | x | x |
| Scientometry | x | x |

Since the inception of the courses, participants have repeatedly evaluated their content and teaching methods positively [17]. However, there is as yet no evidence of the courses' real impact on students' knowledge. Therefore, three basic questions [18] were asked. 1) What do I want to measure? Answer: the degree of difference between students' knowledge at the beginning and at the end of the courses. 2) Is this the best way to assess it? Answer: yes. Previous studies have shown that the pre-test/post-test method is suitable for this kind of research and is simple to evaluate [5,7,18–24], whereas other methods are less suited to the objectives and opportunities of this research: interviewing is time consuming and requires a combination of various methods [23], quiz games are recommended for young learners [18], and self-assessment is limited because students' self-perception can lead them to overrate themselves [20]. 3) Is what I am testing important or significant? Answer: yes, because it can a) show the effectiveness of IL courses, b) help persuade academic staff that the librarian is capable of teaching IL topics, c) reveal students' weaknesses so that instruction can be modified to emphasize the topics they find difficult, and d) show that e-learning can be as effective as in-class teaching. For these reasons, the pre-test/post-test method was selected for this assessment. Additionally, to obtain objective results, the tests asked about students' knowledge rather than their opinions, which other studies have collected as self-assessments [20].

This contribution has two main goals: 1) to present the results of measurements over two semesters showing the increase in students' knowledge after completing the courses, and 2) thereby to demonstrate that e-learning IL courses can be as effective as face-to-face (F2F) IL courses.

Methods

Although several standardised tests exist, such as the Information-Seeking Skills Test, the Standard Assessment of Information Literacy Skills and iSkills [25–26], along with instruments from other studies [5,8,12,21,27], the MUCL tests were prepared to reflect the particular contents of DSVIz01 and VSIV021 and the testing methods discussed at the IVIG seminars. Testing is managed in MU LMS, which includes the ROPOT (Revision, Opinion Poll and Testing) tool for generating online tests from different sets of questions. Trial online pre-tests and post-tests involving medical and PhD students were conducted in autumn 2011 and spring 2012. Their results are excluded from this paper because the trials served to verify the suitability of the pre-test/post-test method and to eliminate potential errors; the outcomes also provided an opportunity to propose additional questions at IVIG seminars. A further advantage of the trial period was that it allowed a comparison of the two groups of students (undergraduate and postgraduate). The trial results showed (as do the results presented in this paper) that the medical and PhD students had a similar level of knowledge before completing the courses. Comparing undergraduate and postgraduate students follows the research of Kate Conway [28], who highlighted the lack of publications on such comparisons, even though their results can help academic staff and libraries identify the IL areas in which their students lack necessary knowledge. Finally, the two groups could be compared because no PhD student had taken VSIV021 during their undergraduate studies; hence, they were assumed to have a knowledge level similar to that of the undergraduates.

The tests comprised questions that addressed the module objectives of DSVIz01 and VSIV021 (Table 1) and were based on the standards mentioned above. Most of the questions were compiled at IVIG seminars. The post-test questions differed from those in the pre-tests; however, each pair of questions always assessed the same knowledge of a single IL topic (Table 2). This avoided the risk of students recalling the correct answer from the pre-test when sitting the post-test (after the pre-test, students could view their results to see what they should study most carefully in the courses). This approach follows previous studies whose authors also needed to use questions relating to their own course contents [8,12,21,25–26,29].

Table 2. Example of set of pre-test and post-test questions on the topic “Searching”

| Pre-test questions | Post-test questions |
|---|---|
| Select a phrase you would use when searching for information about aromatherapy at childbirth: | Select a phrase you would use when searching for information on the ethical aspects of surrogacy: |
| Select a phrase you would use when searching for information only about Thermography in sports medicine: | Select a phrase you would use when searching for information only about Magnetic resonance in medicine: |

As mentioned above, testing was managed in ROPOT, which supports various question formats, such as multiple-choice, true/false, matching, fill-in-the-blank and short answer. Recommendations [26] from the IVIG seminars suggested using different formulations for questions measuring the same knowledge. Therefore, several sets of differently worded questions (Table 2) testing the same knowledge in the pre-test and post-test variants were prepared for each IL topic. All questions were presented in multiple-choice form, most with only one correct answer and a few with two or more correct answers. All questions included the option "I don't know" to discourage guessing, which could skew the results.

After the sets of questions had been prepared, the so-called ROPOT description was compiled. This is a configuration of test parameters: for example, it specifies how many questions are to be generated and from which sets (e.g. only one question from a set of three questions on a particular topic). ROPOT also allows configuration of how many times a student can access the test, the time allowed for answering a question, how many points are awarded for correct answers, etc. The ROPOT description was configured to generate online pre-tests and post-tests containing questions on the topics listed in Table 1, excluding, for each course, the topics mentioned above that it does not cover.
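The selection logic described above can be illustrated with a minimal Python sketch. It assumes that each topic has a set of at least two interchangeable question variants and draws one variant for the pre-test and a different one for the post-test. The dictionary layout, the placeholder question IDs and the function `generate_pre_post` are hypothetical; this is not the ROPOT description syntax.

```python
import random

# Hypothetical question bank: each topic maps to interchangeable variants that
# test the same knowledge (cf. Table 2). Placeholder IDs, not real ROPOT data.
QUESTION_SETS = {
    "searching": ["search-A", "search-B", "search-C"],
    "wildcards": ["wildcards-A", "wildcards-B"],
    "citation ethics": ["cit-ethics-A", "cit-ethics-B", "cit-ethics-C"],
}


def generate_pre_post(question_sets, seed=None):
    """Draw one variant per topic for the pre-test and a different one for the post-test.

    Assumes every topic set holds at least two variants.
    """
    rng = random.Random(seed)
    pre, post = [], []
    for topic, variants in question_sets.items():
        # sample() draws without replacement, so the two variants always differ
        first, second = rng.sample(variants, 2)
        pre.append((topic, first))
        post.append((topic, second))
    return pre, post


pre_test, post_test = generate_pre_post(QUESTION_SETS, seed=2012)
for (topic, q_pre), (_, q_post) in zip(pre_test, post_test):
    print(f"{topic}: pre-test {q_pre}, post-test {q_post}")
```

Fixing the seed makes the draw reproducible, which can help when checking that a pre-test and its post-test never share a variant.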

In autumn 2012 and spring 2013, a total of 159 medical students and 92 PhD students completed the online pre-tests and post-tests at the beginning and at the end of the semester, respectively. Both the e-learning groups of medical students and the in-class groups of PhD students were asked (via e-mail and the library and course websites) to complete the tests online. The notification included a highlighted notice stating that the test results a) have no influence on the final grade, b) are used only to compare students' knowledge at the beginning and at the end of the courses, and c) will help identify topics that require further emphasis. The notice was designed to convey to the students that, even though they would complete the tests without supervision by MUCL librarians, they had no reason to cheat (the evidence that the students did not cheat is presented in the Discussion).

The tests for medical students contained 30 questions, while those for PhD students contained 22 questions (the difference reflects the slightly different course contents mentioned above). Each question was worth one point; for questions with two or more correct answers, the point was divided pro rata according to the number of possible answers. When a student completed the pre-test or the post-test, MU LMS automatically calculated the points and saved the result in an online notepad showing the student's name and points total. The MU LMS section called Answer Management stored the number of students who answered each question. Only students who completed both the pre-test and the post-test were included in the results. The final evaluation was based on simple descriptive statistics, with findings presented as percentages, following the method used and accepted in previously published studies [5,8,12,27,29]. This method is also suitable for other librarians preparing their own research, as it allows simple evaluation of the results regardless of the conditions in which librarians operate (e.g. no statistical support in the library). On this basis, the following hypotheses were tested:
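The pro rata scoring can be sketched as follows. The exact rule used by MU LMS is not described here, so this sketch assumes one reading: the point is split equally among the correct options, each correct option ticked earns its share, "I don't know" (an empty selection) earns nothing, and wrong ticks are not penalised. `Fraction` keeps partial points exact. All names and the sample test are hypothetical.

```python
from fractions import Fraction


def score_question(correct_options, selected_options):
    """Score one question worth one point.

    Assumed rule: the point is split equally among the correct options and
    each correct option the student ticks earns its share. An empty selection
    stands for "I don't know" and earns nothing. Wrong ticks are not penalised.
    """
    correct = set(correct_options)
    share = Fraction(1, len(correct))
    return share * len(correct & set(selected_options))


def score_test(answer_key, responses):
    """Total points; both arguments map a question ID to a collection of options."""
    return sum(
        (score_question(key, responses.get(qid, ())) for qid, key in answer_key.items()),
        Fraction(0),
    )


# Hypothetical three-question test
answer_key = {"q1": {"b"}, "q2": {"a", "c"}, "q3": {"d"}}
responses = {"q1": {"b"}, "q2": {"a"}, "q3": set()}  # q3 answered "I don't know"
print(score_test(answer_key, responses))  # prints 3/2
```

Because wrong ticks cost nothing under this assumed rule, a student could tick every option of a multi-answer question; a deployed scoring scheme may need to handle that case differently.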

Hypothesis 1: The students of both courses will have higher scores in the post-test than in the pre-test.

The points gained in the pre-test and post-test were collected from the MU LMS notepads, and the difference was calculated and then converted into the percentage change in each student's knowledge.
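A minimal sketch of this step, assuming the change is expressed as a percentage of the maximum test score (30 points for medical students, 22 for PhD students) rather than of the pre-test score, which the text does not specify. The bin edges mirror the rows of Table 3; assigning non-integer values below 20 to "1–19" is an assumption. The function names are hypothetical.

```python
def knowledge_change(pre_points, post_points, max_points):
    """Score change as a percentage of the maximum score (30 or 22 points).

    Assumption: the paper does not state the reference value; the maximum
    score is used here rather than the pre-test score.
    """
    return (post_points - pre_points) * 100 / max_points


def table3_category(change):
    """Map a percentage change onto the rate-of-change rows of Table 3."""
    if change < 0:
        return "deterioration"
    if change == 0:
        return "0"
    if change < 20:
        return "1–19"
    if change < 40:
        return "20–39"
    if change < 60:
        return "40–59"
    return "60 and more"


# Hypothetical medical student: 15/30 in the pre-test, 24/30 in the post-test
change = knowledge_change(15, 24, 30)
print(change, table3_category(change))  # prints 30.0 20–39
```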

Hypothesis 2: Each test question will be answered correctly by more students from both courses in the post-test than in the pre-test.

The Answer Management section of MU LMS lists all questions used and shows the number of correct, incorrect and missing answers and the number of students who answered each question. The numbers were aggregated by the set of questions to which each question belonged; e.g. the correct answers to the questions shown in Table 2 were added up to give the number of students who correctly answered questions from the set on the use of Boolean operators. The result was then converted into a percentage.
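The aggregation by question set could look like the following sketch. The row format stands in for the Answer Management counts and is hypothetical, as are the variant IDs and counts; using all students who received a variant (including those who left it unanswered) as the denominator is an assumption.

```python
from collections import defaultdict


def percent_correct_by_set(rows, set_of_question):
    """Percentage of students answering correctly, pooled over each set's variants.

    rows: (question_id, n_correct, n_students) tuples, a hypothetical export of
    the Answer Management counts. n_students counts every student who received
    the variant, including those who left it unanswered (an assumption).
    set_of_question: maps each question variant to the name of its set.
    """
    correct = defaultdict(int)
    students = defaultdict(int)
    for qid, n_correct, n_students in rows:
        name = set_of_question[qid]
        correct[name] += n_correct
        students[name] += n_students
    return {name: 100 * correct[name] / students[name] for name in students}


# Hypothetical counts for two variants of one set
rows = [("search-A", 30, 40), ("search-B", 35, 41)]
sets = {"search-A": "Boolean operators", "search-B": "Boolean operators"}
result = percent_correct_by_set(rows, sets)
print(result)
```

Pooling the raw counts before dividing, rather than averaging the per-variant percentages, keeps variants that happened to be drawn more often from being under-weighted.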

Hypothesis 3: There will be no significant difference between the number of medical students and PhD students who correctly answer the questions in the post-test.

This hypothesis was tested through comparison of the average percentage of students from both courses who correctly answered the questions on topics taught in both courses.
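As an illustration of this comparison, the sketch below takes three questions shared by both courses from Table 4, averages each group's post-test percentages over the two semesters, and labels the group that scored higher. The unweighted two-semester mean is an assumption; the text does not say how the averages were weighted. The names are illustrative.

```python
from statistics import mean

# Post-test percentages (autumn 2012, spring 2013) copied from Table 4
# for three questions shared by both courses.
POST_TEST = {
    "Search": {"medical": (95, 96), "phd": (94, 95)},
    "Quotation marks": {"medical": (96, 88), "phd": (100, 100)},
    "Publication ethics (second question)": {"medical": (98, 93), "phd": (91, 100)},
}


def compare_groups(post_test):
    """Label each question by the group with the higher mean post-test percentage.

    Uses an unweighted mean of the two semesters (an assumption; weighting by
    group size n would give slightly different values).
    """
    labels = {}
    for question, groups in post_test.items():
        med, phd = mean(groups["medical"]), mean(groups["phd"])
        labels[question] = "medical" if med > phd else "phd" if phd > med else "equal"
    return labels


print(compare_groups(POST_TEST))
```

For these three rows the result is "medical" for Search, "phd" for Quotation marks and "equal" (95.5% each) for the second publication ethics question.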

Results

Table 3 shows that most of the medical students (average 95.5%) as well as most of the PhD students (average 92.5%) increased their knowledge each semester. An average of 46% of the medical students and 47% of the PhD students increased their score by 20–39% in the post-test, while an average of 36% of the medical students and 42% of the PhD students increased their knowledge by 1–19%. However, some students answered more questions incorrectly in the post-test than in the pre-test: an average of 4.5% of the medical students and 7.5% of the PhD students earned a lower score in the post-test than in the pre-test.

Table 3. Percentage of students in each semester in relation to the percentage rate of change in their knowledge

| Rate of change (%) | Medical students, autumn 2012 (%) | Medical students, spring 2013 (%) | PhD students, autumn 2012 (%) | PhD students, spring 2013 (%) |
|---|---|---|---|---|
| 60 and more | 0 | 0 | 0 | 2 |
| 40–59 | 12 | 14 | 4 | 2 |
| 20–39 | 46 | 47 | 46 | 48 |
| 1–19 | 41 | 31 | 42 | 41 |
| 0 | 0 | 0 | 0 | 0 |
| deterioration | 1 | 8 | 8 | 7 |
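The two-semester averages quoted in the text can be checked against Table 3 with a few lines of Python. The sketch sums the four improvement rows per semester and takes the unweighted mean of the two semesters; the data are copied from the table, and the names are illustrative.

```python
# Table 3: percentage of students per rate-of-change row, as (autumn 2012, spring 2013)
TABLE3 = {
    "medical": {"60 and more": (0, 0), "40–59": (12, 14), "20–39": (46, 47),
                "1–19": (41, 31), "0": (0, 0), "deterioration": (1, 8)},
    "phd": {"60 and more": (0, 2), "40–59": (4, 2), "20–39": (46, 48),
            "1–19": (42, 41), "0": (0, 0), "deterioration": (8, 7)},
}
IMPROVED = ("60 and more", "40–59", "20–39", "1–19")


def two_semester_average(group, rows):
    """Unweighted mean over the two semesters of the summed percentages in `rows`."""
    autumn = sum(TABLE3[group][row][0] for row in rows)
    spring = sum(TABLE3[group][row][1] for row in rows)
    return (autumn + spring) / 2


for group in TABLE3:
    print(group,
          two_semester_average(group, IMPROVED),            # students who improved
          two_semester_average(group, ("deterioration",)))  # students who scored lower
```

Running it reproduces the figures given in the text: 95.5% improved and 4.5% scored lower among medical students, and 92.5% and 7.5% among PhD students. Each semester column also sums to 100%.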

In both semesters, more medical students answered the questions correctly in the post-test than in the pre-test (Table 4), with most questions answered correctly by 80–99% of them. Certain questions (library services, wildcards, publication ethics, citation ethics, citation methods and bibliographic references) were answered correctly in the post-test by 56–79% of the medical students in the autumn and spring semesters. By contrast, only 26–55% of the medical students in the autumn and spring semesters correctly answered the questions on types of resources and database services.

Both groups of PhD students also answered more questions correctly in the post-test than in the pre-test (Table 4), with most questions answered correctly by 80–100% of them. Between 63% and 79% of the PhD students from both semesters correctly answered the post-test questions on defining keywords, wildcards, the catalogue, publication ethics (first question), citation ethics (first question), citation methods, reference managers (first question) and scientometry. By contrast, only 27–53% of the PhD students from both semesters correctly answered the post-test questions on database services, citation ethics (second question) and bibliographic references (second question).

Table 4. The percentage of medical and PhD students from autumn 2012 and spring 2013 responding correctly in the pre-test and post-test (x means the question was not included in the test)

| Question | Medical autumn 2012 (n=81) pre | post | +/- | Medical spring 2013 (n=78) pre | post | +/- | PhD autumn 2012 (n=48) pre | post | +/- | PhD spring 2013 (n=44) pre | post | +/- |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Library services | 46 | 69 | 23 | 58 | 76 | 18 | x | x | x | x | x | x |
| Search | 69 | 95 | 26 | 62 | 96 | 34 | 88 | 94 | 6 | 70 | 95 | 25 |
| Evaluation of website quality | 40 | 76 | 36 | 33 | 80 | 47 | x | x | x | x | x | x |
| Evaluation of website quality | 87 | 95 | 8 | 86 | 95 | 9 | x | x | x | x | x | x |
| Types of resources | 68 | 32 | -36 | 79 | 45 | -34 | x | x | x | x | x | x |
| Defining keywords | 53 | 99 | 46 | 97 | 95 | -2 | 68 | 91 | 23 | 97 | 98 | 1 |
| Defining keywords | 69 | 48 | -21 | 64 | 55 | -9 | 81 | 74 | -7 | 70 | 74 | 4 |
| Wildcards | 66 | 74 | 8 | 59 | 81 | 22 | 81 | 71 | -10 | 70 | 78 | 8 |
| Quotation marks | 87 | 96 | 9 | 77 | 88 | 11 | 74 | 100 | 26 | 77 | 100 | 23 |
| Catalogue | 65 | 88 | 23 | 64 | 79 | 15 | 65 | 76 | 11 | 73 | 89 | 16 |
| Catalogue | 68 | 78 | 10 | 79 | 83 | 4 | 71 | 63 | -8 | 71 | 63 | -8 |
| Portal of electronic resources | 35 | 86 | 51 | 45 | 92 | 47 | 50 | 91 | 41 | 58 | 93 | 35 |
| Remote access | 17 | 81 | 64 | 15 | 74 | 59 | x | x | x | x | x | x |
| Link service | 53 | 99 | 46 | 46 | 99 | 53 | 67 | 81 | 14 | 58 | 91 | 33 |
| Types of resources | 68 | 84 | 16 | 64 | 79 | 15 | x | x | x | x | x | x |
| Database services | 13 | 26 | 13 | 6 | 30 | 24 | 19 | 49 | 30 | 95 | 53 | -42 |
| Abstract/Annotation | 67 | 47 | -20 | 63 | 45 | -18 | x | x | x | x | x | x |
| Text format | 80 | 83 | 3 | 88 | 77 | -11 | x | x | x | x | x | x |
| Structure of thesis/paper | 61 | 86 | 25 | 64 | 77 | 13 | 70 | 92 | 22 | 64 | 93 | 29 |
| Publication ethics | 68 | 71 | 3 | 77 | 76 | -1 | 85 | 67 | -18 | 90 | 71 | -19 |
| Publication ethics | 64 | 98 | 34 | 65 | 93 | 28 | 73 | 91 | 18 | 47 | 100 | 53 |
| Publication ethics | 94 | 97 | 3 | 97 | 98 | 1 | 98 | 96 | -2 | 62 | 91 | 29 |
| Citation ethics | 49 | 71 | 22 | 56 | 76 | 20 | 58 | 67 | 9 | 18 | 71 | 53 |
| Citation ethics | 9 | 56 | 47 | 15 | 64 | 49 | 16 | 40 | 24 | 52 | 27 | -25 |
| Citation methods | 35 | 64 | 29 | 37 | 69 | 32 | 46 | 77 | 31 | 70 | 63 | -7 |
| Bibliographic references | 68 | 78 | 10 | 69 | 87 | 18 | 88 | 81 | -7 | 35 | 79 | 44 |
| Bibliographic references | 32 | 73 | 41 | 29 | 82 | 53 | 56 | 53 | -3 | 31 | 42 | 11 |
| Reference managers | 18 | 78 | 60 | 29 | 81 | 52 | 38 | 73 | 35 | 74 | 77 | 3 |
| Reference managers | 67 | 97 | 30 | 58 | 99 | 41 | 64 | 100 | 36 | 27 | 97 | 70 |
| Scientometry | 20 | 86 | 66 | 24 | 82 | 58 | 42 | 72 | 30 | 41 | 65 | 24 |

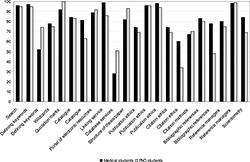

Figure 1 compares the average percentages of medical and PhD students who correctly answered the post-test questions. Of the 22 questions common to both groups, 15 were answered correctly by a higher percentage of medical students than of PhD students, and the other 6 by a higher percentage of PhD students. Only one question, on publication ethics, was answered correctly by the same percentage of students from both groups. Questions on search, quotation marks, the portal of electronic resources, the link service, publication ethics (second and third questions) and reference managers (second question) were answered correctly in the post-test by 96–98% of the medical and PhD students. In the post-test, 77–88% of the students from both courses correctly answered questions on the use of wildcards, the catalogue, the structure of a thesis/scientific paper, bibliographic references, citation ethics and reference managers (the first question in each of these cases) and scientometry. Between 63% and 72% of all students correctly answered the remaining questions, with the exception of the questions on database services and citation ethics (second question), which were answered correctly in the post-test by less than half of all students (40–47%).

Discussion

Even though all students completed the pre-tests and post-tests without supervision, there are two objective reasons to believe that they completed the tests responsibly without cheating. Firstly, the average time taken to complete a test was 16 minutes and the median was 12 minutes (MU LMS ROPOT recorded the date and time when each test was opened and saved, and the MUCL librarian calculated the time taken). This suggests that, even though the test results had no influence on the final grade, the students did not select random answers to finish quickly but spent time reading and answering the questions. Secondly, the answers to the randomly generated test questions showed that the students genuinely tried to choose the correct answer. This was especially evident in the questions where the students had to sort the parts of a scientific paper or thesis into the correct order. These facts suggest that the results can be considered reliable.
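The completion-time check can be sketched as follows, assuming the opening and saving times are available as "YYYY-MM-DD HH:MM" strings. The timestamps below are hypothetical and do not come from the study. Reporting the median alongside the mean is useful because a few tests left open for a long time raise the mean but barely move the median.

```python
from datetime import datetime
from statistics import mean, median

FMT = "%Y-%m-%d %H:%M"  # assumed timestamp format


def completion_minutes(opened, saved):
    """Minutes between opening and saving a test."""
    delta = datetime.strptime(saved, FMT) - datetime.strptime(opened, FMT)
    return delta.total_seconds() / 60


# Hypothetical (opened, saved) pairs, not the study's records
sessions = [
    ("2012-10-01 10:00", "2012-10-01 10:09"),
    ("2012-10-01 10:05", "2012-10-01 10:17"),
    ("2012-10-02 11:00", "2012-10-02 11:21"),
]
durations = [completion_minutes(o, s) for o, s in sessions]
print(mean(durations), median(durations))  # prints 14.0 12.0
```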

Referring to the original hypotheses, the results show that all three hypotheses were largely confirmed. The first hypothesis is largely confirmed, as on average only 4.5% of the medical students (7 people) and only 7.5% of the PhD students (7 people) had worse results in the post-test. Although it may seem surprising that some students scored lower in the post-test than in the pre-test, other researchers' experience shows that this is not an uncommon finding. Whitehurst [12] and Craig and Corrall [5] found that even though the number of students preferring Google for research decreased, some students still used this search engine. Hsieh and Holden [8] mention that fewer students correctly answered questions about the library catalogue in the post-test than in the pre-test. Byerly et al. [30] found that, in the post-test, fewer students showed the ability to ask a librarian for help. Zoellner and colleagues [31] also found lower student scores on a question on evaluating web page quality. Additionally, Stec [32] found that many questions (regardless of subject) were answered incorrectly more often in the post-assessment, while Tancheva and colleagues [33] found an increase in incorrect answers in the post-test on identifying the year of an article in a bibliographic reference.
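The headcounts in parentheses can be recovered approximately from the rounded percentages in Table 3 and the group sizes in Table 4, as this short sketch shows. Because the published percentages are rounded to whole numbers, the result is an approximation; the names are illustrative.

```python
# (percentage with a lower post-test score, group size n) per semester,
# from Table 3 (percentages) and Table 4 (n)
LOWER_SCORE = {
    "medical": [(1, 81), (8, 78)],
    "phd": [(8, 48), (7, 44)],
}


def approximate_headcount(pairs):
    """Convert rounded percentages back into numbers of students."""
    return sum(round(pct * n / 100) for pct, n in pairs)


for group, pairs in LOWER_SCORE.items():
    print(group, approximate_headcount(pairs))
```

Both groups come out at 7 students, matching the numbers given in the text.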

Although the number of medical and PhD students with a lower score in the post-test is small, the possible causes of their results were identified. Several medical students from both semesters answered the question on types of resources incorrectly. It is very likely that they misinterpreted a text from a popular magazine as a text from a scientific journal: the text included the phrase "Some studies suggest ...", but the lack of citations indicated that it came from a popular magazine. A similar problem could explain the low scores on the questions on database services. The students had learned to find the full text of an article via a link service and therefore had no reason to notice whether a database is purely bibliographic or full-text. Although this problem was discovered in the PhD students' classes in autumn 2011 and spring 2012, and the difference between Web of Science/Scopus and ScienceDirect/Wiley/SpringerLink was subsequently emphasised, a decrease in the PhD students' knowledge was found in spring 2013, as well as low scores among the medical students in both semesters.

Consequently, exercises will be prepared that require students to find, in Web of Science or Scopus, an article whose full text is not available via the linking service (e.g. at Masaryk University, articles from some open access journals are not linked and can only be found on the journal's website). In this task, the students will discover that Web of Science and Scopus are not full-text databases and that, when they find an article there, they will have to find another way of accessing the full text.

Inattention is another possible reason for the poor results on the question on defining keywords and on the question on abstracts and annotations. In the first case, students incorrectly chose "binocular, surgery, strabismus, children, adults" instead of "binocular, surgery, strabismus" for the topic "Binocular vision after strabismus surgery of children and adults" and did not realize that specifying the words "children" and "adults" was unnecessary (in the pre-test they had a different topic with a single patient group: The problem of urinary incontinence in women). With regard to the difference between an abstract and an annotation, they did not notice the phrase "which generally describes" in the definition of an annotation and thought they were reading the definition of an abstract (in the pre-test, the question on abstracts included the phrase "which describes in detail"). Inattention could also explain the PhD students' low score on using the catalogue: for the question "In the library catalogue you will find books about neurosurgery by", they chose "keyword" instead of "subject heading".

However, these mistakes might have been avoided had the questions been constructed differently. To teach keyword selection in future, many more example topics and corresponding search queries will have to be included in the online study materials and in-class presentations, so that students learn how to proceed in various situations. In the in-class lessons, the semantic difference between "keyword" and "subject heading" will also have to be emphasised further, and searches demonstrating this difference will have to be prepared. With regard to abstracts and annotations, students will have to be encouraged to recognize an abstract or annotation from a sample text rather than merely from a definition.

The low scores on questions on publication and citation ethics were also due to unsuitable question construction. The students were asked to identify a citation style in a list of names without realizing that thousands of citation styles exist and that PhD students need not know all of them (the post-test question included one real name, "Chicago Style", and two fictitious names, "Cascading Style Sheets" and "Blue Style", while the pre-test question "Which of the following citation styles is fictitious?" included two well-known names, "ISO 690" and "Turabian Style", and one fictitious name, "Cascading Style Sheets"). Therefore, the question will have to be changed to check knowledge of citation styles commonly used in medical journals (e.g. the student will choose among ACS style, NLM style and MLA style), because both medical students and PhD students are taught about these in lectures or during tasks.

It should be noted, however, that despite the shortcomings discussed above, almost all students in both groups showed an increase in knowledge. As Hsieh and Holden [8] write, “test question construction is almost as much an art as science”, and there will always be a possibility that some students misunderstand a question. The decrease in knowledge shown by some students could also be caused, as Hsieh and Holden [8] mention, by the students’ lack of motivation to retain the knowledge gained in the courses. This could be the case for medical and PhD students, who know that the online study materials will always be available and kept up to date on the MUCL website. In the light of all this, even though the first hypothesis was not confirmed, the increase in knowledge is clearly associated with both e-learning and in-class teaching.

The fact that on average 36% of the medical students and 42% of the PhD students increased their knowledge by only 1–19% needs further discussion in view of the results relating to the second hypothesis. Table 4 shows that almost the same average percentage of medical and PhD students answered the pre-test questions correctly, while in the post-tests the average percentage of medical students answering correctly exceeded that of the PhD students by 13 percentage points. Figure 1 shows that the medical students also answered a larger number of the questions correctly more often than the PhD students. These results confirm the second hypothesis and show that e-learning can be an effective teaching method. The results are not regarded as proof that e-learning is more effective than in-class teaching, but they lend credence to the notion that e-learning can be applied in information education. It should be noted that several studies have demonstrated the opposite, i.e. slightly higher effectiveness of in-class lessons. Nichols and colleagues [9] found that in-class students scored higher than online students, but not significantly so: the “difference was less than one half of one question out of twenty”. Several years later, at the same university, Shaffer [11] again verified the effectiveness of e-learning, confirming the previous results and finding no significant difference between the students’ answers on their competence in navigating the library website, obtaining the full text of articles or searching for documents. Salisbury and Ellis [10] compared groups that completed IL lessons as hands-on computer-based sessions, as a joint classroom presentation with an instructor’s demonstration, or through e-learning. They found that 9% more students from the e-learning group were able to recognise journal citations, while 4–5% more students from the hands-on group than from the other groups were able to recognise and search for journal article citations and to search using Boolean operators.
Similar results were recorded at the University of Central Florida [34], where no significant differences in library skills were found between groups of students who had in-class lessons with F2F instruction or a web-based tutorial and groups who had only a web-based class. As already mentioned, these studies describe no significant differences, and in view of the MUCL results, e-learning can be considered an effective alternative to in-class teaching. The most likely reason for the higher results of the medical students is the number of tasks (activities in Table 1) that they have to complete on their own, whereas the PhD students can consult a MUCL librarian immediately. As noted above, this possibility may reduce the PhD students’ motivation to retain the relevant knowledge.

It is unclear, however, whether the third hypothesis, which stated that there would be no significant difference between the numbers of medical and PhD students who answered the post-test questions correctly, can be regarded as confirmed, given the 13-percentage-point difference between the average percentages of medical and PhD students who answered the post-test questions correctly. This difference could be considered significant, even though it seems small in the context of the preceding discussion and even though similar average percentages of medical (54%) and PhD (51%) students answered the pre-test questions correctly. However, several studies have regarded similar differences as minor. Anderson and May [35] evaluated their results with the understanding that some students could have acquired IL skills in a previous course, and found no significant difference in knowledge between students who completed IL instruction in online, blended or F2F form. No significant difference has likewise been found at the State University of New York at Oswego [9,11], Oakland University [36], the University of South Florida [37] and the University of Melbourne [10]. These studies recorded a slight difference in favour of in-class teaching, while at Indiana University-Purdue University Indianapolis [38] a slight difference in favour of online tutorials was found. A significant difference in favour of an online course, however, was found at the University of Arizona [39]. Librarians there concluded that teaching courses online is better than a one-off IL lesson, since in the online course students “have multiple opportunities to engage with information literacy concepts that they can apply in their […] courses”.
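Whether a 13-percentage-point gap counts as “significant” is ultimately a statistical question. As a rough, hypothetical check (not the analysis carried out in this study), a two-proportion z-test could be applied to the percentages of students answering correctly. This sketch treats each group’s average percentage as a single proportion over n students (159 medical and 92 PhD students), which ignores the fact that every student answered many questions, so its output is only an approximation. The function name `two_proportion_z_test` is illustrative.

```python
import math


def two_proportion_z_test(p1: float, n1: int, p2: float, n2: int) -> tuple[float, float]:
    """Two-sided z-test for the difference between two independent proportions.

    Returns (z, p_value) and uses the pooled proportion for the standard error.
    """
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)), i.e. the two-sided p-value
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Pre-test averages reported in the text: 54 % (medical, n=159) vs 51 % (PhD, n=92)
    z, p = two_proportion_z_test(0.54, 159, 0.51, 92)
    print(f"z = {z:.2f}, p = {p:.2f}")
```

With the pre-test averages, z is roughly 0.46 and p roughly 0.65, so no difference is detectable there. The same function could be applied to the post-test averages in Table 4, which are not reproduced in this section.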

These experiences suggest that the difference between the average percentages of medical and PhD students answering the post-test questions correctly can also be regarded as not significant, in which case the third hypothesis can be regarded as confirmed. However, the rate of the PhD students’ knowledge increase shows that changes are needed to improve the efficiency of in-class instruction. Therefore, PhD students will be required to complete tasks on searching for articles whose full text is not available via the linking service and on creating bibliographic references both manually and with Zotero, as these are the main skills they will use in their scientific writing.

A comparison of the measured impact of the e-learning and in-class courses on the knowledge of medical and PhD students shows that e-learning is a realistic option for IL activities. As mentioned above, these results should be taken as evidence that e-learning can be used in information literacy education. The test results will enable MUCL to transform the DSVIz01 course. If the autumn 2013 and spring 2014 test results also confirm the effectiveness of e-learning, then from autumn 2014 the DSVIz01 course will be offered as in-class lessons only to PhD students who prefer this type of learning, while the others will complete the course through e-learning. This could increase the number of course participants without increasing the time spent teaching, which is a clear benefit because Czech librarians organize IL activities alongside their other library work.

From autumn 2013, the pre-test results will be used to emphasise topics that fewer students answered correctly. In addition to the practical implications already mentioned in the discussion, recordings of the MUCL IL lessons and short videos showing various practical tips and tricks will be made to help students refresh their knowledge. Although the results discussed in this paper relate mainly to theoretical knowledge, more attention will also be paid to measuring the acquisition of practical skills.

The comparison presented in this paper also suggests that teaching effectiveness can be evaluated simply, without complicated tools, using any survey tool. This opens up the possibility for libraries with financial or technical limitations to conduct their own research, the results of which could highlight their important role in fostering an information literate society. However, each librarian considering their own measurement should prepare the test questions carefully because, as the experiences detailed above show, even one overlooked word can lead a student to an incorrect answer.
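Because such an evaluation needs only the pre-test and post-test answers exported from a survey tool, the tallying can be sketched in a few lines of Python. The sketch is hypothetical: the names `StudentResult`, `knowledge_change`, `summarise` and `percent_correct_per_question` are illustrative, and it assumes that a student’s knowledge change is the percentage-point difference between post-test and pre-test scores relative to the maximum score (for example 30 for the medical students’ test). This is one plausible reading, not a definition confirmed in this section.

```python
from dataclasses import dataclass


@dataclass
class StudentResult:
    student_id: str
    pre_score: int   # correct answers in the pre-test
    post_score: int  # correct answers in the post-test


def knowledge_change(result: StudentResult, max_score: int) -> float:
    """Assumed definition: percentage-point change relative to the maximum score."""
    return 100.0 * (result.post_score - result.pre_score) / max_score


def summarise(results: list[StudentResult], max_score: int) -> dict[str, float]:
    """Share of students (in %) who improved, did not improve, or improved by under 20 points."""
    changes = [knowledge_change(r, max_score) for r in results]
    n = len(changes)
    improved = sum(1 for c in changes if c > 0)
    low_gain = sum(1 for c in changes if 0 < c < 20)  # the "1-19%" band
    return {
        "improved_pct": 100.0 * improved / n,
        "not_improved_pct": 100.0 * (n - improved) / n,
        "low_gain_pct": 100.0 * low_gain / n,
    }


def percent_correct_per_question(answer_sheets: list[dict[str, bool]]) -> dict[str, float]:
    """Percentage of students answering each question correctly."""
    totals: dict[str, list[int]] = {}
    for sheet in answer_sheets:
        for question, correct in sheet.items():
            counts = totals.setdefault(question, [0, 0])  # [correct, answered]
            counts[1] += 1
            if correct:
                counts[0] += 1
    return {q: 100.0 * c / n for q, (c, n) in totals.items()}


if __name__ == "__main__":
    # Made-up illustrative data, not results from the study.
    sample = [
        StudentResult("s1", 12, 24),
        StudentResult("s2", 15, 18),
        StudentResult("s3", 20, 19),
    ]
    print(summarise(sample, max_score=30))
    print(percent_correct_per_question([{"Q1": True, "Q2": False}, {"Q1": True, "Q2": True}]))
```

Running the same two summaries separately for each study group gives the kind of comparison reported in this paper; a spreadsheet export from any survey tool can be converted into the `StudentResult` records and answer dictionaries.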

The results also showed no significant difference between the students of the two groups at the start of the courses, which confirms Conway’s [28] view that undergraduate and postgraduate students can viably be compared. Since the PhD students began the courses with no more knowledge than the medical students, this finding also demonstrates the necessity of holding IL courses for PhD students and confirms the role of the librarian as a professional educator.

References

1. Pinto M, Cordon JA, Diaz RG. Thirty years of information literacy (1977-2007): A terminological, conceptual and statistical analysis. J Lib Inf Sci 2010; 42(1): 3-19.

2. Bailey P, Derbyshire J, Harding A, Middleton A, Rayson K, Syson L. Assessing the impact of a study skills programme on the academic development of nursing diploma students at Northumbria University, UK. Health Info Libr J 2007; 24(Suppl. 1): 77-85.

3. Barnard A, Nash R, O’Brien M. Information literacy: Developing lifelong skills through nursing education. J Nurs Educ 2005; 44(11): 505-510.

4. Childs S, Blenkinsopp E, Hall A, Walton G. Effective e-learning for health professionals and students - barriers and their solutions. A systematic review of the literature - findings from the HeXL project. Health Info Libr J 2005; 22(Suppl. 2): 20-32.

5. Craig A, Corrall S. Making a difference? Measuring the impact of an information literacy programme for pre-registration nursing students in the UK. Health Info Libr J 2007; 24(2): 118-127.

6. Horton FW. Overview of Information Literacy Resources Worldwide. [Online] UNESCO: Paris 2013 [cited Aug 12, 2013]: 64-69. ISBN 978-92-3-001131-4. Available at WWW: http://unesdoc.unesco.org/images/0021/002196/219667e.pdf.

7. Emmett A, Emde J. Assessing information literacy skills using the ACRL standards as a guide. Ref Serv Rev 2007; 35(2): 210-229.

8. Hsieh ML, Holden HA. The effectiveness of a university’s single-session information literacy instruction. Ref Serv Rev 2010; 38(3): 458-473.

9. Nichols J, Shaffer B, Shockey K. Changing the face of instruction: Is online or in-class more effective? Coll Res Lib 2003; 64(5): 378-388.

10. Salisbury F, Ellis J. Online and face-to-face: Evaluating methods for teaching information literacy skills to undergraduate arts students. Lib Rev 2003; 52(5): 209-217.

11. Shaffer BA. Graduate student library research skills: Is online instruction effective? J Lib Inf Serv Distan Learn 2011; 5(1-2): 35-55.

12. Whitehurst A. Information literacy and global readiness: Library involvement can make a world of difference. Behav Soc Sci Lib 2010; 29(3): 207-232.

13. American Educational Research Association. Standardy pro pedagogické a psychologické testování. Testcentrum: Praha 2001.

14. American Library Association. Information Literacy Competency Standards for Higher Education. [Online] Association of College & Research Libraries: Chicago 2000. Available at WWW: http://www.ala.org/acrl/files/standards/standards.pdf.

15. Association of Libraries of Czech Universities. Information Education Strategy at Universities in the Czech Republic: Reference Document of the Association of Libraries of Czech Universities. [Online]. Available at WWW: http://www.ivig.cz/en-koncepce.pdf.

16. Kratochvíl J, Sejk P. Získávání a zpracování vědeckých informací: pracovní sešit. Masarykova univerzita: Brno 2011.

17. Kratochvíl J. Evaluation of e-learning course, Information Literacy, for medical students. Electron Lib 2013; 31(1): 55-69.

18. Blanchett H, Powis C, Webb J. A Guide to Teaching Information Literacy: 101 Practical Tips. Facet: London 2012.

19. Walsh A. Information literacy assessment: Where do we start? J Lib Inf Sci 2009; 41(1): 19-28.

20. Mackey TP, Jacobson TE (eds). Collaborative Information Literacy Assessments: Strategies for Evaluating Teaching and Learning. Facet Publishing: London 2010.

21. Mulherrin EA, Abdul-Hamid H. The evolution of a testing tool for measuring undergraduate information literacy skills in the online environment. Commun Inf Lit 2009; 3(2): 204-215.

22. O’Neil CA, Fisher CA. Assessment and Evaluation of Online Learning. In: O’Neil CA, Fisher CA, Newbold SK (eds). Developing Online Learning Environments in Nursing Education. [Online] Springer Publishing Company: New York 2009 [cited Aug 13, 2013]. Available at WWW: site.ebrary.com/lib/masaryk/docDetail.action?docID=10265302&p00.

23. Radcliff CJ, Jensen ML, Salem JA, Burhanna KJ, Gedeon JA. A Practical Guide To Information Literacy Assessment For Academic Librarians. Libraries Unlimited: Westport 2007.

24. Schilling K, Applegate R. Best methods for evaluating educational impact: A comparison of the efficacy of commonly used measures of library instruction. J Med Libr Assoc 2012; 100(4): 258-269.

25. O’Connor LG, Radcliff CJ, Gedeon JA. Applying systems design and item response theory to the problem of measuring information literacy skills. Coll Res Lib 2002; 63(6): 528-543.

26. Staley SM, Branch NA, Hewitt TL. Standardised library instruction assessment: An institution-specific approach. Inf Res 2010; 15(3): 436.

27. Hodgens C, Sendall MC, Evans L. Post-graduate health promotion students assess their information literacy. Ref Serv Rev 2012; 40(3): 408-422.

28. Conway K. How prepared are students for postgraduate study? A comparison of the information literacy skills of commencing undergraduate and postgraduate information studies students at Curtin University. Aust Acad Res Lib 2011; 42(2): 121-135.

29. Jackson PA. Plagiarism instruction online: Assessing undergraduate students’ ability to avoid plagiarism. Coll Res Lib 2006; 67(5): 418-428.

30. Byerly G, Downey A, Ramin L. Footholds and foundations: Setting freshmen on the path to lifelong learning. Ref Serv Rev 2006; 34(4): 589-598.

31. Zoellner K, Samson S, Hines S. Continuing assessment of library instruction to undergraduates: A general education course survey research project. Coll Res Lib 2008; 69(4): 370-383.

32. Stec EM. Using best practices: Librarians, graduate students and instruction. Ref Serv Rev 2006; 34(1): 97-116.

33. Tancheva K, Andrews C, Steinhart G. Library instruction assessment in academic libraries. Public Serv Q 2007; 3(1-2): 29-56.

34. Beile PM, Boote DN. Does the medium matter?: A comparison of a Web-based tutorial with face-to-face library instruction on education students’ self-efficacy levels and learning outcomes. Res Strateg 2004; 20(1): 57-68.

35. Anderson K, May FA. Does the method of instruction matter? An experimental examination of information literacy instruction in the online, blended, and face-to-face classrooms. J Acad Librarianship 2010; 36(6): 495-500.

36. Kraemer EW, Lombardo SV, Lepkowski FJ. The librarian, the machine, or a little of both: A comparative study of three information literacy pedagogies at Oakland University. Coll Res Lib 2007; 68(4): 330-342.

37. Silver SL, Nickel LT. Are online tutorials effective? A comparison of online and classroom library instruction methods. Res Strateg 2005; 20(4): 389-396.

38. Orme WA. A study of the residual impact of the Texas information literacy tutorial on the information-seeking ability of first year college students. Coll Res Lib 2004; 65(3): 205-215.

39. Mery Y, Newby J, Peng K. Why one-shot information literacy sessions are not the future of instruction: A case for online credit courses. Coll Res Lib 2012; 73(4): 366-377.

Please cite as:

Kratochvíl J. Measuring the impact of information literacy e-learning and in-class courses via pre-tests and post-test at the Faculty of Medicine, Masaryk University. MEFANET Journal 2014; 2(2): 41-50. Available at WWW: http://mj.mefanet.cz/mj-03140210.

This is an open-access article distributed under the terms of the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 License (http://creativecommons.org/licenses/by-nc-sa/3.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work, first published in the MEFANET Journal, is properly cited. The complete bibliographic information, a link to the original publication on http://www.mj.mefanet.cz/, as well as this copyright and license information must be included.