MEFANET Journal 2014; 2(1): 20-25

ORIGINAL ARTICLE

A qualitative evaluation of University of Cape Town medical students’ feedback of the Objective Structured Clinical Examination

Nazlie Beckett, Derek Adriaan Hellenberg, Mosedi Namane

Division of Family Medicine, School of Public Health and Family Medicine, University of Cape Town, South Africa

* Corresponding author: nazlie.beckett@uct.ac.za

Abstract

Article history:

Received 10 March 2014

Revised 5 June 2014

Accepted 23 June 2014

Available online 26 June 2014

Peer review:

Martin Vejražka, Simon Wilkinson


Background: All medical students at the University of Cape Town (UCT) rotate through a Family Medicine clerkship during their final year. Students are based at community health centres (CHCs) in the Western Cape Metropole, and at a rural site in Vredenburg. At the end of the four-week clerkship, students sit an Objective Structured Clinical Examination (OSCE).

Aim: The purpose of this study is to evaluate the students’ feedback of the OSCE at the end of the 6th year Family Medicine rotation, and to make recommendations which can be used to improve the OSCE.

Methods: This is a structured qualitative study. The study population included final year medical students rotating through the Family Medicine clerkship, over a period of seven months. Each student completed a structured questionnaire immediately after the OSCE. These evaluations were analysed using a “content analysis” method.

Results: The majority of students were happy with the structure and content of the OSCE, as well as the fact that it was aligned to what was taught during the clinical rotation. However, the majority of students complained that the time allocated per station was inadequate.

Conclusion: Objective ways should be utilized by the Division of Family Medicine to improve the time allocation and the current format of the OSCE.

Keywords

OSCE; new medical curriculum; University of Cape Town; South Africa

Introduction

The OSCE was first used to assess clinical competence in 1975. It has been used as both a formative and a summative assessment. The success of the OSCE depends on adequate resources such as time and money, as well as the number and content of the stations [1]. It traditionally consists of a series of stations, and each station is designed to assess a specific competency using standardised peer-reviewed checklists [2]. The OSCE is a reliable and effective multi-station test which is used to assess practical skills, the demonstration of applied knowledge and communication skills. It is usually organized as 10–20 stations (more stations improve reliability), through which students have to rotate [3]. The stations are timed, usually ranging from 3 to 15 minutes per station [3]. The Accreditation Council for Graduate Medical Education (ACGME) recommends a time of 10–15 minutes per station. Allowing more time makes it possible to test more of the competencies associated with the task [2]. Each station focuses on testing a particular skill, e.g. history-taking or interpretation of test results, and the students are marked against prepared checklists. The stations which focus on performance of procedures are “manned”, and these can constitute up to 6–10 stations in an OSCE. The “unmanned” stations are written stations where answers are set down on answer sheets, and these are also marked against checklists at the end of the examination [3]. A study done by Prislin et al. in 1998 [4] showed that the OSCE tested students’ competency skills. Khurseed et al. [1] conducted a study amongst third year medical students at a university in Karachi, to evaluate undergraduate students’ perceptions of the OSCE. It was found that the majority of students regarded the OSCE as a practical and useful assessment tool. Students felt that some stations offered ambiguous instructions and that the time allocated was not enough for the assigned tasks. The students gave constructive feedback on the structure and organization of the OSCE, and the overall feedback from that study was used to review that OSCE process.

All UCT medical students do a 4-week Family Medicine clerkship, which is integrated with Palliative Medicine, during their final year. There are 10 rotations per year, comprising 16–20 students each. A subgroup of about 4 students is allocated to a 24-hour Community Health Centre (CHC) (either Retreat, Hanover Park, Mitchell’s Plain, or Vanguard CHC) in the Metro West Geographical Service Area (GSA) of Cape Town. There is also an option for an additional group to do a voluntary rural rotation in Vredenburg in the Saldanha Bay Sub-district on the West Coast, around 160 kilometres from Cape Town. The student base is located at the Vredenburg District Hospital and students also see patients at the Hannah Coetzee CHC as well as at some clinics and non-governmental organizations (NGOs). During their clerkship, students are required to clerk patients with a broad range of presenting complaints. They also participate in a group community project and write up individual case studies for Family Medicine and Palliative Medicine. Each of these sites has a senior family physician who supervises the students. On the last day of the clerkship, the students sit an OSCE, which is used to assess core learning outcomes and skills learnt during the clerkship. The outcomes are built on the concept of “spiral learning” that is carried forward from pre-clinical years. The OSCE consists of 16–18 stations, and is a combination of written questions, practical skills and communication skills. Each station is 6 minutes long (with some being preparation stations). The purpose of this research was to evaluate the students’ feedback of the OSCE at the end of the 6th year Family Medicine rotation, and to make recommendations which can be used to improve the OSCE.

Methodology

The study population consisted of all final year medical students rotating through the family medicine clerkship over a period of 7 months. There are 18–20 students in each block and the participation rate was expected to be more than 80%, because the questionnaire was completed immediately after the OSCE. The study was initially set for a period of one year (ten rotations), but due to time constraints it was reduced to seven blocks. The student rotations are all the same, and the OSCE structure is similar throughout the year, with 14 stations consisting of knowledge and procedural skills and 2 stations of communication skills.

A structured pretested questionnaire was used, adapted from an existing one used by the assessment team in 2011 (see Supplementary material). The questionnaire consisted of open-ended questions based on the structure, time management and content of the OSCE.

Informed consent was obtained from the students at the start of the rotation and the questionnaires were anonymously completed by each participant at the end of the block.

The questionnaire consisted of seven questions (Supplementary material), most of which had single answers. A content analysis was done manually by the principal author for all the questions and the responses were analysed for common themes. The comments for each question were listed, and grouped into categories based on a common theme. The term “reasonable structure” is an example of a common theme, and included comments such as “good, fair, well organized, adequate and excellent”. Some responses did not lend themselves to further analysis, while others included comments, some of which were used to formulate recommendations. The two other authors analysed the results in the same way, using content analysis, and collaborated with the principal author either in person or by email. The number of responses for each theme was captured in a table on an Excel spreadsheet and transposed into figures. The last question asked students to make suggestions as to how the OSCE could be improved. These suggestions were discussed with three other UCT associated family physicians and final recommendations on how to improve the OSCE were formulated.
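The grouping and counting step described above can be illustrated with a short sketch. The study's coding was done manually by the authors; the Python below is only a rough, hypothetical analogue. The function names (`code_comment`, `tally_themes`) and the codebook are assumptions introduced for illustration. Only the “reasonable structure” keywords and the sample comments are taken from the article, while the keywords for the second theme are assumed.

```python
from collections import Counter

# Hypothetical codebook mapping each theme to trigger phrases.
# "reasonable structure" keywords are quoted from the article;
# the "more time needed" keywords are assumptions for illustration.
CODEBOOK = {
    "reasonable structure": ["good", "fair", "well organized", "adequate", "excellent"],
    "more time needed": ["not enough time", "rushed"],
}


def code_comment(comment, codebook=CODEBOOK):
    """Return the first theme whose keyword appears in the comment."""
    text = comment.lower()
    for theme, keywords in codebook.items():
        if any(keyword in text for keyword in keywords):
            return theme
    return "uncoded"  # left for a human coder to review


def tally_themes(comments):
    """Count how many comments fall under each theme."""
    return Counter(code_comment(c) for c in comments)


# Sample comments quoted in the Results section of the article.
comments = [
    "Well organized – a good balance of written and clinical stations",
    "Good structure and balance",
    "ECG station – not enough time",
    "Found it quite rushed and time pressured",
]
counts = tally_themes(comments)
print(counts)
```

Simple substring matching of this kind is crude (for example, “inadequate” contains “adequate”), which is one reason a manual reading by several coders, as used in the study, remains the safer approach.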

Ethics approval for the study was obtained from the Human Research Ethics Committee of the Faculty of Health Sciences at the University of Cape Town (HREC REF: 105/2012). Funding was provided by a URC start-up grant, which is offered to new UCT academic staff.

Limitations of the study

The research period of the study was intended to be over a period of one year (ten rotations), but due to time constraints this was reduced to seven rotations. The questionnaire was completed immediately after the OSCE, so some students were a bit stressed and this may have influenced their responses. Not all students completed the questionnaire.

Results

The response rate to questionnaires was 95 out of a total student throughput of 126 (75%).

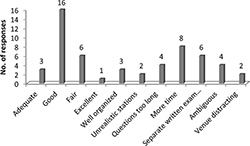

“What did you think of the structure of the OSCE (written stations vs. clinical stations)?”

Fifty-five students (58%) responded to this question (Figure 1). Twenty-nine of these students (53%) felt that the OSCE had a reasonable structure (adequate, good, fair, excellent, good balance of written and clinical stations or well organized). Examples of comments were: “Well organized – a good balance of written and clinical stations” and “Good structure and balance”.

Six students (11%) suggested that the written parts in the OSCE should be housed in a separate written examination. An example of a comment was: “I think the written stations should have been a written exam.” Four students (7%) complained that some of the written stations were ambiguous. An example of a comment was: “The written stations were often badly worded/confusing.” Eight students (15%) said that more time was needed. Examples: “ECG station – not enough time” and “Found it quite rushed and time pressured”. Four students (7%) commented that the questions were too long: “The time was sufficient for answering the questions, but the scenarios for the written questions were too long!” Two students (4%) thought that the venue was distracting: “A bit distracting when you can hear others talking while you are writing”.

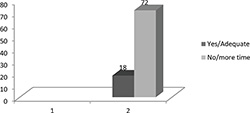

“Was the time allocated fair/time management adequate for the OSCE stations?”

Ninety students (94%) responded to this question (Figure 2). The replies were mostly “yes” or “no”. Examples of statements categorized as “yes” were “Time allocation was fair” and “Time was appropriate”, and examples categorized as “no” were “Not enough time” and “Pressured for time”.

Seventy-two of these students (80%) stated that the time allocation for the OSCE was inadequate. Examples: “Not enough time for some stations” and “Some questions too long and needed more time”. Four students (4%) thought that the Evidence-Based Practice Simulated Office Oral (EBP SOO) and the Communication Skills station needed more time, for example: “The live stations (EBP and Motivational Interviewing) needed more time”. Fourteen students (16%) said that more time should be allocated for the written stations; “Need more than 6 minutes for written stations” and “palliative care stations needed more time” were a few examples. The general response was that students needed eight to ten minutes per station instead of the allocated 6 minutes. Examples of this were “Not enough time – need 8–10 minutes per station” and “Need more time – 6 minutes too short for some questions”. Only eighteen students (20%) agreed that the allocated time was adequate.

“Did the questions/OSCE stations cover the content of the block adequately (i.e., Family Medicine and Palliative Care)?”

Eighty-one students (85%) responded to this question. The responses were mostly either “yes” or “no”.

Sixty-eight of these students (84%) said that the OSCE content was adequately covered during the block. “Yes, but the detail was more than we were exposed to” and “yes, but there is a lot of self-directed studying” were a few of the comments which were included in this category. Thirteen students (16%) felt that the OSCE content did not adequately cover what was taught in the block. “No, it depended on what you saw at the clinic” and “No, it felt like an examination draining all knowledge of 6th year, rather than applied family medicine knowledge” were a couple of comments.

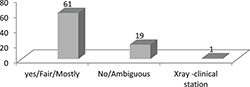

“Was the format easy to follow?”

Eighty-one students (85%) responded to this question (Figure 3). The answers were mostly either “yes” or “no”. Comments such as “fair” and “mostly” were included in the “yes” category.

Sixty-one of these students (75%) agreed that the format of the OSCE was good. Nineteen students (24%) said that the format was not good. This included the students who said “no”, as well as the “ambiguous” category. “No, some questions were difficult to understand” was one comment. “No, the palliative care questions were ambiguous” and “The written questions were too detailed with too much to read” were other comments. One student (1%) suggested that certain written stations, for example on X-rays, could be clinical stations.

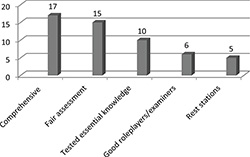

“What did you like about the OSCE?”

Fifty-three students (56%) responded to this question. The responses were grouped into five categories, based on common themes (Figure 4). “Comprehensive” included comments such as “covered core knowledge, tested a wide variety of knowledge, good coverage, relevant stuff and relevant knowledge”. “Fair assessment” included comments such as “fair test, asked what was expected/taught, and not trying to trick us”.

Seventeen of these students (32%) said that the OSCE was comprehensive and covered a broad range of topics and knowledge. Examples of some comments were “Questions were asking core knowledge” and “testing a wide variety of knowledge”. Fifteen students (28%) thought that it was a fair assessment. “They asked us exactly what we were taught – the department is not trying to trick us” was one comment. Ten students (19%) said that essential knowledge was tested. “It covers relevant stuff” and “It felt like an actual office environment and realistic scenarios we would face one day” were a few comments. Six students (12%) liked the examiners and role players. “Examiners polite and easy to work with” and “good role players” were a couple of comments. Five students (9%) liked the rest stations. “Time between stations was useful” was one comment.

“What did you not like about the OSCE?”

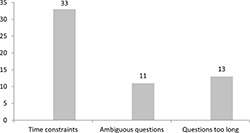

Fifty-seven students (60%) responded to this question (Figure 5). The responses were categorised into three identifiable themes: Time constraints, Ambiguous questions and Questions too long.

Thirty-three of these students (58%) said that there was not enough time to answer the questions or complete the stations. “Time allocation unrealistic”, “Time allocation at live stations was not fair” and “6 min is too short for patient counselling” were a few comments. Eleven of these students (19%) felt that some questions were ambiguous. Thirteen students (23%) said the questions were too long. “The clinical stations were ambiguous” and “I felt rushed and confused” were a few of the comments.
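As a worked check of how theme counts translate into the reported percentages, the following minimal sketch recomputes the shares for this question from the counts given above. The function name `theme_percentages` is hypothetical and chosen for illustration; the authors captured their counts in an Excel spreadsheet, so this is only an equivalent calculation, not their tooling.

```python
def theme_percentages(counts):
    """Convert theme counts to whole-number percentages of all respondents."""
    total = sum(counts.values())
    return {theme: round(100 * n / total) for theme, n in counts.items()}


# Counts reported in the text for "What did you not like about the OSCE?"
figure5_counts = {
    "Time constraints": 33,
    "Ambiguous questions": 11,
    "Questions too long": 13,
}

shares = theme_percentages(figure5_counts)
for theme, pct in shares.items():
    print(f"{theme}: {pct}%")
```

The three counts sum to the 57 respondents for this question, and the rounded shares (58%, 19% and 23%) agree with the figures reported in the text.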

“How the OSCE can be improved? Any recommendations?”

Sixty-six students (69%) responded to this question.

Thirty-five of these students (53%) suggested that the time allocation should be increased and this suggestion ranged from 8–15 minutes per station. Five students (8%) suggested that the written stations should be a separate two-hour examination. Two students (3%) suggested that there should be more live stations and that these should be isolated from the written stations. Four students (6%) suggested that sweets should be provided at stations, or that students should bring lunch. Four students (6%) suggested that the OSCE should remain as it is, but the number of stations should be reduced. One student (2%) wanted two evidence-based stations (EBP SOO). Five students (8%) said that some of the longer written questions should be reduced to short answer questions. Five students (8%) found the sequencing of stations distracting and suggested that live stations be more isolated. Two students (3%) wanted a live station and more practical teaching for Palliative care, and one student (2%) requested feedback after the examination. One student (2%) suggested that calculators should be used and one student (2%) suggested a box instead of envelopes for the collection of scripts. “More practical teaching in Palliative Medicine is needed”, “A live palliative medicine station would be an idea” and “We want feedback on our answers” were a few of the comments.

Discussion

The response rate to the questionnaires was 95 out of a total student throughput of 126. This is a 75% response rate, which is less than the 80% expected. This is possibly because the evaluation forms were completed immediately after the OSCE and some students may have chosen not to participate.

The results showed that the quality of the OSCE in terms of format (length of questions), structure (ambiguity of questions) and time management was not ideal and that certain improvements could be made. Approximately 10% of students felt that the written questions should be in a separate two-hour examination and the clinical stations should consist of real patients instead of role-players. Real patients would be possible at the procedural skills stations, but using them at the “live” communication skills stations would be problematic because it would jeopardise the “standardised patient” concept. However, this could be overcome if external role-players were specifically trained for the OSCE. According to the literature, well-trained Standardised Role players (SPs) can be used for communication skills stations, and contribute to the reliability of the examination by ensuring that all students are presented with the same challenge [5].

Time management of the OSCE was mentioned as a problem. Eighty percent of the students thought that the time allocation of 6 minutes per station was not sufficient and that more time was needed to complete some stations (Figure 2). This corresponded to similar findings in the study done by Khurseed et al. in 2007 [1]. Only 20% of the students thought that the time allocation per station was adequate. Rafique and Rafique conducted a study in 2013, where they evaluated the students’ feedback of the OSCE as one of the assessment methods used at Nishtar College in Multan, Pakistan [6]. The results from their study showed that students thought the time per station was insufficient and this concurs with the findings of this study. The problem of inadequate time per station was discussed at the sixth year review meeting and a decision was made to increase the OSCE time allocation to 7 minutes per station.

Most of the students (84%) thought that the OSCE content was aligned to what was taught during the rotation at the various sites, for both Palliative Medicine and Family Medicine. These perceptions can be compared to similar findings by Siddiqui in 2013, where research results showed that 53% of students agreed that the OSCE tasks were taught during clinical rotations [7]. This emphasises the importance of using a blueprint to plan the content of the OSCE.

Two students also mentioned that self-study was required and that some questions required more detail than what they were exposed to at the clinics. Only 16% of the students did not think that the OSCE content was adequately covered during the rotation. One student thought that the OSCE was not a good application of Family Medicine knowledge. These last comments could indicate that spiral learning is lost over the years and that students did not fully understand the concept of a 6th year student internship year.

The format of the OSCE in terms of the type of questions and the structuring of the questions at the clinical and written stations was generally accepted as good or fair (75%). However, 24% of the students thought that certain questions were difficult to understand and that some Palliative Medicine questions were ambiguous. This ambiguity of questions correlates with the findings of Khurseed et al. in 2007 [1]. Similar findings were confirmed in the study done by Siddiqui at the OSCE centre in 2010, where nearly 30% of students said that the OSCE stations were difficult to understand [7]. Thirteen students said that certain questions were too long and contained too much information to read and complete in time. Other suggestions were that some written questions, e.g., the X-ray and ECG questions, could be clinical or interactive stations.

The general consensus was that the OSCE was a comprehensive and fair assessment of what was learnt during the block. The majority of students thought the setting felt like a consulting room, especially for the clinical and interactive stations. This is reassuring for the Division, because it indicates that the objective of making the OSCE as real as possible was being met. These findings correlate with student perceptions internationally, at institutions such as King Saud University in Saudi Arabia. Raheel and Naeem conducted a study at this institution in Saudi Arabia, where they assessed students’ perceptions of the OSCE. Their research showed that 52% of students thought that the exam was fair and 57% stated that it evaluated a wide variety of clinical skills [8].

The perceptions of UCT students generally correlate with the findings of similar studies conducted at other institutions.

Students made various suggestions as to how the OSCE could be improved. Time allocation was a problem and, based on the students’ recommendations to improve the OSCE, it was suggested that the time per station be increased. Another suggestion was that there should be more practical teaching in Palliative Medicine, and that a “live station” should be incorporated in the OSCE. This is a good suggestion and has been a topic of discussion within the Division. Due to the paucity of permanent staff and resources, this has been put on hold, but it is a possibility for the future. It would not be realistic to get a “live” patient, but a role-player could be trained for a specific scenario. The use of standardised patients (SPs) is recommended in the literature and these can either be staff members or lay persons with some form of acting or medical background [9]. Only six students made the suggestion that the written and clinical examination should be separated. This will not be addressed immediately, unless evaluation in the future demonstrates a problem. The ideal OSCE should consist of mostly clinical stations where procedural and communication skills are tested, and this is planned for the future development of our OSCE. According to the literature, more recently evolved OSCE programmes also include assessment of professionalism, quality improvement and documentation [9]. The Division of Family Medicine has included the latter two aspects in the OSCE, but professionalism is assessed elsewhere. Students wanted immediate feedback on their answers. This is not always feasible, but the family physicians made a suggestion which could address this. These suggestions were discussed, and certain recommendations were made.

Recommendations

It was decided that the time management of the OSCE would be reviewed and the time per station would be increased to 7 minutes. The possibility of making the OSCE purely clinical, as well as the logistics of having another separate written examination (that may include Short-Answer Questions and computer-based MCQs), will be considered. A feedback session can be held after the examination, so that questions identified as being difficult by the majority of students can be reviewed appropriately by adapting them for future use or removing them from the assessment.

Conclusion

The majority of students were happy with the structure and content of the OSCE, as well as the fact that it was aligned to what was taught during the clinical rotation. However, the majority of students complained that the time allocated per station was inadequate, as shown in the results. The positive comments are reassuring for the Division of Family Medicine and show that objectives are by and large being met. The time constraints will be addressed as mentioned, by increasing the time allocated to 7 minutes per station. The results suggest that UCT students’ perceptions of the OSCE are similar to those of their international counterparts at other institutions, as evidenced by the literature. The key perceptions which showed some similarity were that the time allocation for OSCE stations was inadequate, and that the OSCE tasks were aligned to what was taught during the clinical rotations.

The understanding of spiral learning is “lost” amongst some students, as some responses suggest that they do not expect to be examined on prior learning done during earlier years of study. This misconception should be addressed at orientation. The recommendations drawn from the study findings by UCT Family Physicians will be used to improve the OSCE from 2014.

Acknowledgements

We would like to thank Dr Liz Gwyther (Palliative Medicine, UCT), Prof Richard Harding (King’s College, London), Ms Manisha Chavda (Course administrator) as well as all the Family Physicians and tutors within the Division for their valuable contribution towards the course. A special thanks to the class of 2012 final year medical students at UCT, for their willingness to participate in this research study. The study was supported by the University of Cape Town start-up grant.

Conflict of interest

Dr Beckett is the convenor of the sixth year Family Medicine course and this may be seen as a possible conflict of interest.

References

[1] Khurseed I, Usman Y, Usmaan J. Students' feedback of objectively structured clinical examination: a private medical college experience. J Pak Med Assoc 2007; 57(3): 148-150.

[2] Gupta P, Dewan P, Singh T. Objective Structured Clinical Examination (OSCE) Revisited. Indian Pediatr 2010; 47(11): 911-920.

[3] Bhatnager KR, Saoji VA, Banerjee AA. Objective Structured Clinical Examination for undergraduates: is it a feasible approach to standardized assessment in India. Indian J Ophthalmol 2011; 59(3): 211-214.

[4] Prislin MD, Fitzpatrick CF, Lie D, Giglio M, Radecki S, Lewis E. Use of an Objective Structured Clinical examination in evaluating student performance. Fam Med 1998; 30(5): 338-344.

[5] Boursicot K, Roberts T. How to set up an OSCE. Clinical Teacher 2005; 2(1): 16-20.

[6] Rafique S, Rafique H. Students’ feedback on teaching and assessment at Nishtar Medical College, Multan. J Pak Med Assoc 2013; 63(9): 1205-1209.

[7] Siddiqui FG. Final year MBBS students' perception for Observed Structured Clinical Examination. J Coll Physicians Surg Pak 2013; 23(1): 20-24.

[8] Raheel H, Naeem N. Assessing the Objective Structured Clinical Examination: Saudi family medicine undergraduate medical students’ perceptions of the tool. J Pak Med Assoc 2013; 63(10): 1281-1284.

[9] Casey PM, Goepfert AR, Espey EL, Hammoud MM, Kaczmarczyk JM, Katz NT, Neutens JJ, Nuthalapaty FS, Peskin E. To the point: reviews in medical education – the Objective Structured Clinical Examination. Am J Obstet Gynecol 2009; 200(1): 25-34.

Supplementary material

PPH6000W Family Medicine OSCE Questionnaire

- “What did you think of the structure of the OSCE (written stations vs. clinical stations)?”

- “Was the time allocated fair/time management adequate for the OSCE stations?”

- “Did the questions/OSCE stations cover the content of the block adequately (i.e., Family Medicine and Palliative Care)?”

- “Was the format of the questions easy to follow?”

- “What did you like about the OSCE?”

- “What did you not like about the OSCE?”

- “How the OSCE can be improved? Any recommendations?”

Please cite as:

Beckett N, Hellenberg DA, Namane M. A qualitative evaluation of University of Cape Town medical students’ feedback of the Objective Structured Clinical Examination. MEFANET Journal 2014; 2(1): 20-25. Available at WWW: http://mj.mefanet.cz/mj-03140310.

This is an open-access article distributed under the terms of the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 License (http://creativecommons.org/licenses/by-nc-sa/3.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work, first published in the MEFANET Journal, is properly cited. The complete bibliographic information, a link to the original publication on http://www.mj.mefanet.cz/, as well as this copyright and license information must be included.